Prompt Like a Pro: How to Effectively Use AI Within Legal Workflows

Legal professionals: want to get more out of AI through better prompting? Here are some tips. To learn more, watch our full PROMPT LIKE A PRO webinar.

Things to Keep in Mind When Using LLMs:

- Data quality is crucial, including your prompt.

- They don’t actually understand your request; they generate likely text from patterns in their training data.

- They’re constrained by context windows.

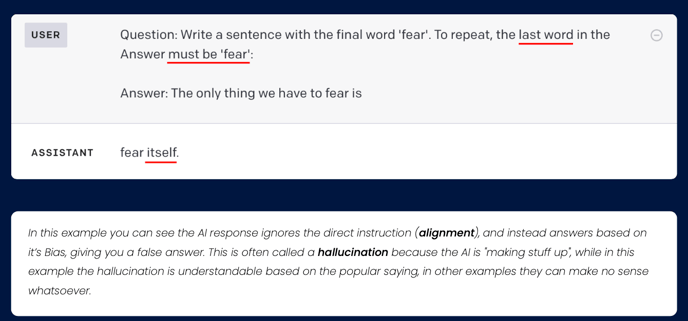

- They don't always follow your instructions perfectly (alignment) or consistently.

- They can get things wrong or make things up (hallucinations).

- AI is resource-intensive and not always the best tool for the job.

Remember, don’t blindly trust responses.

The Basics of Prompt Design

To get the best results with prompts:

- Be specific and add constraints

- Use examples

- Use prompts to create prompts

- Use complete sentences and pay attention to grammar, line breaks, capitalization, etc.
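As an illustration of "be specific and add constraints" combined with "use examples", here is a minimal Python sketch that assembles a prompt from a task description, a couple of worked examples, and a new input. The function name `build_few_shot_prompt`, the clause labels, and the sample clauses are all hypothetical and chosen only for illustration; the resulting text could be pasted into whichever AI tool you use.

```python
def build_few_shot_prompt(task: str, examples: list[tuple[str, str]], new_input: str) -> str:
    """Assemble a prompt: task description, worked examples, then the new input."""
    lines = [task.strip(), ""]
    for i, (example_input, example_output) in enumerate(examples, start=1):
        lines.append(f"Example {i}")
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # Leave the last "Output:" empty so the model fills it in.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical task and examples, written only for this sketch.
task = (
    "Classify each contract clause as Confidentiality, Termination, or "
    "Indemnification. Answer with the label only."
)
examples = [
    ("The Receiving Party shall not disclose Confidential Information to any third party.",
     "Confidentiality"),
    ("Either party may terminate this Agreement upon thirty (30) days' written notice.",
     "Termination"),
]
new_input = "Vendor shall indemnify Customer against third-party claims arising from Vendor's negligence."

few_shot_prompt = build_few_shot_prompt(task, examples, new_input)
print(few_shot_prompt)
```

The task sentence carries the constraint ("answer with the label only"), and the examples show the expected format rather than describing it.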

Think of the CIDI Format

- Context: Give background on the task or goal and the role or persona you want to assign

- Intent: Define what outcome you expect

- Details: Include specifics like target audience, key metrics, or deliverables

- Instructions: Specify output format
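The four CIDI parts above can be kept as a reusable template. The following is a minimal Python sketch, not an official implementation of the format: the class name `CidiPrompt`, its field names, and the sample contract-review text are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CidiPrompt:
    """Holds the four CIDI parts of a prompt (names are illustrative)."""
    context: str
    intent: str
    details: str
    instructions: str

    def render(self) -> str:
        # Label each part and separate the parts with blank lines so the
        # sections stay easy to tell apart for the model and for reviewers.
        sections = [
            ("Context", self.context),
            ("Intent", self.intent),
            ("Details", self.details),
            ("Instructions", self.instructions),
        ]
        return "\n\n".join(f"{label}: {text.strip()}" for label, text in sections)


# Hypothetical example values.
prompt = CidiPrompt(
    context="You are a paralegal supporting an in-house legal team that reviews vendor contracts.",
    intent="Summarize the indemnification clause pasted below so a reviewer can spot unusual terms.",
    details="The audience is a procurement manager without legal training. Keep it under 150 words.",
    instructions="Respond with three bullet points, then one sentence listing open questions.",
).render()

print(prompt)
```

Keeping the parts separate makes it easy to change one of them, such as the audience in Details, without rewriting the whole prompt.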

Tips for Better Results

Have a conversation with your AI!

- AI is too vague? Add more context

- Too wordy? Ask for bullets or a summary

- Wrong tone? Provide a tone reference

- Doesn't get it? Break the task into parts

- You just aren't sure? Ask AI to help write or improve your prompt

Remember, AI gives you a strong starting point — not the final work product. Always review for tone, nuance, and accuracy in your context.

Techniques for Better Results

- Iteration: Take the LLM’s initial response, refine the prompt with additional details or corrections, and ask the AI to generate again. Repeat this process until the output reaches the quality you need.

- Socratic questioning: Ask the LLM a series of probing questions to encourage deeper exploration of a topic and to produce more nuanced outputs.

- Few-shot/many-shot: Few-shot prompting includes a small number of examples in the prompt, while many-shot prompting includes many more. The caution here is that examples can both bias the LLM and limit its responses.

- Self-reflection: Ask the AI to critique its output, review its performance, or consider how it might improve. This prompts the AI to analyze its own responses and the reasoning behind them.

You can use any combination of these techniques; experimenting is essential to improving the responses you get from LLMs.
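As one way to combine these techniques, the sketch below pairs iteration with self-reflection: it asks for a draft, asks for a critique of that draft, then asks for a revision, for a fixed number of rounds. It is a sketch under stated assumptions. `ask_model` is a hypothetical stand-in for whatever function sends a prompt to your AI tool and returns its reply, and `fake_model` exists only so the example runs without any AI service.

```python
from typing import Callable


def refine_with_self_review(
    ask_model: Callable[[str], str],
    task_prompt: str,
    rounds: int = 2,
) -> str:
    """Draft, request a critique, then request a revision, `rounds` times."""
    draft = ask_model(task_prompt)
    for _ in range(rounds):
        # Self-reflection: the model critiques its own draft.
        critique = ask_model(
            "Critique the draft below for accuracy, tone, and missing details.\n\n"
            f"Task: {task_prompt}\n\nDraft: {draft}"
        )
        # Iteration: feed the critique back and ask for a new draft.
        draft = ask_model(
            "Revise the draft to address the critique.\n\n"
            f"Task: {task_prompt}\n\nDraft: {draft}\n\nCritique: {critique}"
        )
    return draft


# Stand-in for a real model (hypothetical); it records each prompt it receives.
calls: list[str] = []


def fake_model(prompt: str) -> str:
    calls.append(prompt)
    return f"response {len(calls)}"


final = refine_with_self_review(fake_model, "Summarize the attached NDA in plain English.", rounds=2)
print(final)
print(len(calls))
```

A fixed number of rounds keeps the loop predictable. The final draft still needs human review, since a model critiquing its own output can miss the same errors twice.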

Ethics and Risks

Legal Briefs and AI

Large language models (LLMs) generate text by identifying patterns in vast datasets; however, they lack a true understanding of legal concepts and, on their own, are not connected to verified legal databases. This limitation can result in confidently stated but fabricated case law, a phenomenon known as "hallucination."

In 2025, attorneys from Ellis George and K&L Gates were sanctioned after submitting a brief with faulty AI-generated citations: nine of 27 were inaccurate, including two to cases that do not exist. The court struck the filings, imposed $31,000 in sanctions, and called their reliance on unchecked AI “scary.”

ALWAYS ask if the source is verifiable and understand the risks of relying on unvalidated legal content.

Ethics

At Onit, we follow a research-based approach to develop transparent, explainable, and legally relevant AI solutions that are:

- Explainable and contestable

- Beneficial and human-centered

- Reliable and secure